Optimizing Software Engineering and Enterprise Data Management

In today’s digital era, data drives every aspect of business and technology. Applications generate, process, and transfer massive amounts of information continuously. Managing this flow efficiently is crucial for performance, compliance, and security. Data flow analysis (DFA) is the practice of systematically examining how data moves through systems, identifying potential bottlenecks, inconsistencies, or vulnerabilities, and optimizing pipelines for reliability and efficiency.

For software engineers, data architects, and enterprise IT teams, understanding data flow is not just a technical exercise; it is a strategic necessity. Poorly managed data pipelines can result in errors, financial loss, compliance violations, and reputational damage. DFA tools provide the visibility, automation, and intelligence required to maintain clean, secure, and efficient data movement.

This article explores the key benefits of data flow analysis, top tools in the market, comparisons between open-source and enterprise platforms, security considerations, visualization best practices, real-world case studies, and the future of DFA enhanced by AI.

Introduction: The Critical Need for Data Flow Analysis

Consider a large retail bank handling millions of transactions daily across branches, mobile apps, and third-party financial systems. Without proper monitoring, a single failed integration or delayed transaction can propagate errors across reporting systems, causing incorrect analytics, regulatory violations, and customer dissatisfaction.

Data flow analysis tools help prevent such issues by:

- Tracking the path of every data element from source to destination

- Identifying inconsistencies, duplicates, and errors

- Monitoring performance and latency

- Ensuring compliance with internal policies and regulations
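
The first capability in the list above, tracking a data element from source to destination, can be illustrated with a minimal sketch. It assumes each record carries its own lineage list; the `Record` class, the `pass_through` helper, and the stage names are hypothetical and are not taken from any of the tools discussed in this article.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Record:
    """A data element plus the list of stages it has passed through."""
    payload: dict
    lineage: list = field(default_factory=list)


def pass_through(record: Record, stage: str) -> Record:
    """Append a (stage, UTC timestamp) hop to the record's lineage."""
    record.lineage.append((stage, datetime.now(timezone.utc).isoformat()))
    return record


def path_of(record: Record) -> list:
    """Return the ordered stage names the record has visited."""
    return [stage for stage, _ in record.lineage]


# Hypothetical transaction moving through three illustrative systems.
txn = Record({"txn_id": "T-1001", "amount": 250.0})
for stage in ("mobile_app", "core_banking", "reporting_db"):
    pass_through(txn, stage)

print(" -> ".join(path_of(txn)))
```

Production tools usually keep this provenance in a separate store rather than on the record itself, but the idea is the same: every hop leaves a timestamped trace that can be queried later.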

Key Insight: In industries like finance, healthcare, and e-commerce, DFA is no longer optional. It is essential to maintain operational integrity and secure sensitive information.

Key Benefits of Data Flow Analysis in Software Engineering

Data flow analysis provides tangible benefits for software engineers, data teams, and organizations of all sizes. Here’s an in-depth look:

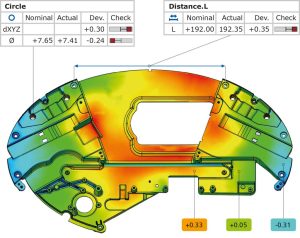

Ensures Data Quality

High-quality data is critical for decision-making. DFA tools allow engineers to:

- Detect inconsistencies, missing values, or duplication across multiple sources

- Validate transformations applied during ETL processes

- Maintain integrity in analytics, reporting, and operational systems
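
As a rough illustration of the first check above, the sketch below scans a batch of rows for duplicate keys and missing required values. The schema (`sku`, `warehouse`, `quantity`) and the `quality_report` function are assumptions made for this example; a real pipeline would run comparable checks inside its integration tool.

```python
from collections import Counter

REQUIRED_FIELDS = ("sku", "warehouse", "quantity")  # hypothetical schema


def quality_report(rows, key="sku"):
    """Return duplicated key values and indexes of rows missing required fields."""
    counts = Counter(row.get(key) for row in rows)
    duplicates = sorted(k for k, n in counts.items() if k is not None and n > 1)
    incomplete = [
        i for i, row in enumerate(rows)
        if any(row.get(f) in (None, "") for f in REQUIRED_FIELDS)
    ]
    return {"duplicates": duplicates, "incomplete_rows": incomplete}


rows = [
    {"sku": "A1", "warehouse": "north", "quantity": 10},
    {"sku": "A1", "warehouse": "south", "quantity": 4},
    {"sku": "B2", "warehouse": "north", "quantity": None},
]
report = quality_report(rows)
print(report)  # {'duplicates': ['A1'], 'incomplete_rows': [2]}
```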

Example: A logistics company discovered discrepancies between warehouse inventory data and ERP reports. Implementing Apache NiFi pipelines allowed real-time monitoring, reducing data errors by 65%.

Enhances System Performance

By analyzing how data moves through systems, engineers can identify bottlenecks, inefficient transformations, and slow queries. Optimization strategies include:

- Parallelizing tasks for high-volume pipelines

- Caching frequently accessed data

- Reducing unnecessary transformations
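
Two of these strategies, parallelizing tasks and caching frequently accessed data, can be sketched with the Python standard library. The `lookup_region` function stands in for a slow reference-data lookup and, like the event shape, is hypothetical. Threads mainly help when the work is I/O-bound; CPU-bound transformations would need processes or a distributed engine instead.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache


@lru_cache(maxsize=1024)
def lookup_region(store_id: int) -> str:
    """Stand-in for a slow reference-data lookup; results are cached per store_id."""
    return "EU" if store_id % 2 == 0 else "US"


def enrich(event: dict) -> dict:
    """Attach the region to one event without mutating the input."""
    return {**event, "region": lookup_region(event["store_id"])}


def enrich_all(events, workers=4):
    """Enrich events in parallel; Executor.map preserves input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(enrich, events))


events = [{"id": i, "store_id": i % 3} for i in range(6)]
enriched = enrich_all(events)
print([e["region"] for e in enriched])
print(lookup_region.cache_info().currsize)  # one cache entry per distinct store_id
```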

Example: A SaaS platform reduced pipeline latency from 15 minutes to under 2 minutes after implementing Talend workflows with optimized transformations.

Strengthens Security and Compliance

DFA tools support compliance and risk management by:

- Detecting unauthorized data access

- Logging sensitive data movements for audits

- Enforcing encryption in transit and at rest

Case in point: Healthcare providers using SonarQube for code-level DFA identified potential data leaks in patient information systems, preventing HIPAA violations before deployment.

Simplifies Troubleshooting

When data errors occur, identifying the root cause can be complex without proper tracking. DFA tools provide:

- End-to-end visibility of pipeline execution

- Alerts for failed processes

- Historical logs for debugging

Example: A fintech startup used Azure Data Factory monitoring dashboards to isolate a failing ETL job within minutes, preventing customer-facing reporting errors.

Supports Automation

Modern data pipelines require continuous monitoring and validation. DFA tools enable:

- Automated alerts for anomalies

- Scheduled validation checks

- Self-correcting workflows triggered by data issues
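
One simple way to implement an automated anomaly alert is a z-score check on a pipeline metric such as rows loaded per run. The sketch below is an assumption about how such a check might look, not the mechanism used by any specific tool; the function name, the sample history, and the threshold of 3 standard deviations are illustrative.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` when it lies more than `threshold` standard deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


row_counts = [1000, 1020, 980, 1010, 990]  # hypothetical rows loaded per run
print(is_anomalous(row_counts, 400))   # True: far below the usual volume
print(is_anomalous(row_counts, 1005))  # False: within normal variation
```

A scheduler could call a check like this after each run and raise an alert or pause downstream jobs when it returns `True`.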

Example: A global retailer automated reconciliation of sales transactions using NiFi, reducing manual auditing time by 80%.

Facilitates Team Collaboration

Data flow analysis provides a shared view of pipelines, improving collaboration between:

- Software developers

- Data engineers

- QA and DevOps teams

Tip: Use visual dashboards to align multiple teams on critical data movements.

Top Data Flow Analysis Tools

Several tools dominate the DFA landscape, each with distinct strengths and target audiences.

Apache NiFi

Overview: An open-source data integration and workflow automation tool.

Key Features:

- Drag-and-drop flow creation

- Real-time monitoring

- Wide range of connectors for databases, APIs, and cloud services

- Security controls: TLS, user authentication, and access policies

Use Cases: Data ingestion, IoT streaming, real-time ETL, log processing

Pros: Scalable, flexible, open-source, supports real-time processing

Cons: Advanced features require technical expertise

Example Implementation: A telecom company used NiFi to manage millions of IoT sensor events daily, achieving real-time analytics while maintaining data quality.

Talend

Overview: Enterprise-grade data integration and ETL platform.

Key Features:

- Visual pipeline designer

- Pre-built connectors for cloud, on-premise, and big data systems

- Data governance and quality modules

- Automation of ETL jobs and scheduling

Use Cases: Enterprise ETL, data warehousing, analytics, data cleansing

Pros: Comprehensive, enterprise support, user-friendly

Cons: Licensing costs; steep learning curve for complex workflows

Example Implementation: A retail chain integrated multiple point-of-sale systems using Talend, achieving consistent reporting across 500+ stores.

SonarQube

Overview: Primarily a static code analysis tool, offering DFA for software security.

Key Features:

- Detects data flow issues and tainted inputs in code

- Integration with CI/CD pipelines

- Automated reporting on vulnerabilities

Use Cases: Preventing SQL injections, insecure data handling, and code-level vulnerabilities

Pros: Free and paid versions; strong community; CI/CD integration

Cons: Limited for enterprise ETL visualization

Example Implementation: A banking software team integrated SonarQube to monitor sensitive data handling, reducing potential security vulnerabilities by 40%.
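
To make "tainted inputs" concrete, the sketch below shows the kind of flow a code-level taint analysis is designed to flag: user-controlled input reaching a SQL statement through string concatenation, next to the parameterized alternative. It uses Python's built-in `sqlite3` module; the `accounts` table and both function names are hypothetical, and the example illustrates the vulnerability class rather than SonarQube's own rules or output.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (owner TEXT, balance REAL)")
conn.executemany(
    "INSERT INTO accounts VALUES (?, ?)",
    [("alice", 120.0), ("bob", 75.5)],
)


def balances_unsafe(owner: str):
    # Tainted flow: user input is concatenated into the SQL text (the sink).
    query = f"SELECT owner, balance FROM accounts WHERE owner = '{owner}'"
    return conn.execute(query).fetchall()


def balances_safe(owner: str):
    # The input is bound as a parameter, so it is never parsed as SQL syntax.
    return conn.execute(
        "SELECT owner, balance FROM accounts WHERE owner = ?", (owner,)
    ).fetchall()


payload = "x' OR '1'='1"
print(len(balances_unsafe(payload)))  # 2: the injected condition matches every row
print(len(balances_safe(payload)))    # 0: no owner has that literal name
```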

Azure Data Factory

Overview: Microsoft’s cloud-based ETL and DFA platform.

Key Features:

- Visual workflow designer

- Native Azure service integrations

- Real-time monitoring and alerting

- Scalable, cloud-based execution

Use Cases: Cloud ETL, hybrid pipelines, automated analytics

Pros: Enterprise-grade, scalable, intuitive

Cons: Subscription costs; primarily Azure ecosystem

Example Implementation: A multinational enterprise automated financial reporting pipelines across regions using Azure Data Factory, improving compliance and reducing manual intervention.

Open Source vs Enterprise Tools

| Feature | Open Source | Enterprise |
| --- | --- | --- |
| Cost | Free | Paid subscription or license |
| Support | Community | Vendor SLA |
| Customization | High | Limited by vendor constraints |
| Security | Depends on setup | Built-in compliance and security |
| Updates | Community-driven | Regular vendor releases |
| Ideal For | Startups, research, internal projects | Large enterprises, regulated industries |

Tip: Open source tools are excellent for learning, experimentation, and early-stage deployments. Enterprises often require robust support, SLAs, and advanced security offered by paid platforms.

Security Considerations in Data Flow Analysis

Security is paramount, especially for sensitive industries:

- Encrypt data in transit and at rest

- Use role-based access controls

- Maintain audit logs for all pipeline activity

- Detect anomalies using automated alerts

- Comply with applicable regulations such as GDPR, HIPAA, and PCI DSS

- Regularly review pipeline configurations

- Apply patch management and software updates

- Monitor third-party connectors for vulnerabilities

- Segregate environments for development, testing, and production

- Train staff on secure data handling practices
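
Two items from this checklist, role-based access control and audit logging, can be combined in a small sketch. The roles, the permission names, and the `read_patient_id` function are hypothetical. The masking step uses a truncated, unsalted SHA-256 digest purely for illustration; on its own it would not be adequate de-identification for low-entropy identifiers.

```python
import hashlib

ROLE_PERMISSIONS = {  # hypothetical roles and permissions
    "analyst": {"read_masked"},
    "auditor": {"read_masked", "read_raw"},
}
audit_log = []  # in practice this would be an append-only, tamper-evident store


def mask(value: str) -> str:
    """Replace a sensitive value with a short, stable SHA-256 digest."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def read_patient_id(role: str, patient_id: str) -> str:
    """Return the raw or masked value depending on role, and record the access."""
    allowed = ROLE_PERMISSIONS.get(role, set())
    if "read_raw" in allowed:
        outcome, result = "raw", patient_id
    elif "read_masked" in allowed:
        outcome, result = "masked", mask(patient_id)
    else:
        audit_log.append({"role": role, "outcome": "denied"})
        raise PermissionError(f"role {role!r} may not read patient IDs")
    audit_log.append({"role": role, "outcome": outcome})
    return result


print(read_patient_id("auditor", "P-1"))  # raw value
print(read_patient_id("analyst", "P-1"))  # 12-character digest
print(audit_log)
```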

Example: A healthcare provider used DFA combined with SonarQube and NiFi to detect unauthorized access attempts and maintain HIPAA compliance.

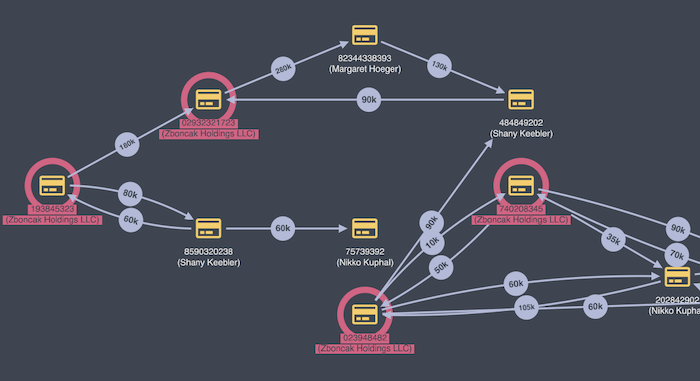

Visualizing Data Pipelines Effectively

Visualization is key for understanding complex pipelines:

- Use graphical interfaces for flow creation

- Apply color-coding to distinguish data types

- Implement real-time dashboards for monitoring

- Utilize hierarchical views to simplify complex pipelines

- Annotate and highlight warnings for team collaboration

Tip: Visualization reduces debugging time and improves team understanding of system behavior.

Case Studies

Case Study 1: Global Bank

Challenge: Inconsistent transaction data leading to reporting errors.

Solution: NiFi pipelines with monitoring dashboards and automated alerts.

Result: Transaction errors fell by 70%, compliance improved, and troubleshooting became faster.

Case Study 2: E-Commerce Enterprise

Challenge: Multiple systems feeding analytics with varying data formats.

Solution: Talend pipelines for ETL, data cleaning, and integration.

Result: Unified analytics data, faster reporting, improved data quality.

Future of Data Flow Analysis with AI

AI is revolutionizing DFA by providing:

- Predictive monitoring of pipelines

- Automated anomaly detection

- Optimization of workflow execution

- Intelligent data validation

- Natural language queries for analyzing pipeline performance

Example: AI-driven DFA tools can predict potential bottlenecks before they affect users, reducing downtime and improving SLA adherence.
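
Predicting a bottleneck before it affects users can be approximated, at its simplest, by extrapolating a trend. The sketch below fits a least-squares line to recent pipeline latencies and estimates how many more runs remain before an SLA threshold is reached. It is a plain statistical stand-in rather than a machine-learning model, and the function name, latency values, and SLA are assumptions for illustration.

```python
import math


def runs_until_breach(latencies, sla_seconds):
    """Fit a least-squares line to `latencies` and return the number of
    further runs before the trend reaches `sla_seconds`, or None if the
    trend is flat or improving."""
    n = len(latencies)
    if n < 2:
        return None
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(latencies) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, latencies))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    breach_run = (sla_seconds - intercept) / slope  # run index where the line hits the SLA
    return max(0, math.ceil(breach_run - (n - 1)))


recent = [60, 70, 80, 90]  # hypothetical latencies in seconds, oldest first
print(runs_until_breach(recent, sla_seconds=120))
```

With latency growing by 10 seconds per run, the fitted line reaches the 120-second SLA three runs after the latest measurement. Real predictive monitoring would account for seasonality and noise, which a straight line does not.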

Conclusion and Recommendations

Data flow analysis tools are essential for modern software engineering and enterprise data management. Key takeaways:

- Ensure data quality, performance, and security

- Choose tools aligned with organization size and complexity

- Implement visual monitoring and automated alerts

- Add AI capabilities for predictive and proactive data management

- Integrate DFA with CI/CD pipelines for automation